Aidra

SJ Shoemaker

Word Count: 2,955

4/29/2024

ASSISTANT

Initiating recording.

[CLICK]

ASSISTANT

Begin in 3..2..

WEBB

Audio report covering the asset and equipment loss and the surrounding events throughout August 2024 at site TR-20-067. Chief Broad Artificial Research Officer Ozias Webb speaking.

A billing anomaly was first brought to my attention on the 2nd of August. Although this discrepancy began a few months prior, the 2nd was our first indication of anything amiss. Our billing is never perfectly consistent. In fact, it can look drastically different depending on how often we provide new datasets for ingestion, which will differ in curation level depending on which type of… uh, right. Types… AI systems fall into one of two categories.

Narrow AIs, which our sister site handles, are focused on completing specific and well-defined tasks with superhuman precision. In their case, surface-to-air missile counter targeting and, from my latest lunch discussion with Sunil, launch site identification and preemptive hacking? Which is astounding if possible. I don’t want to throw cold water on their experiments, but it seemed quite early days from our discussion. But I digress.

Broad AIs are, as the name suggests, intent on building a general intelligence that constructs internal logic that will assist it in wholly unique or foreign situations. If we were to somehow put a particularly advanced broad AI into a human shell and switch them on inside enemy territory, could they navigate the world in a competent way? Perhaps collect useful intelligence. And then make their way back to a friendly country for pickup. If done correctly, it would be of immeasurable benefit to our military with practically no risk—assuming an automated killswitch to trigger at the first sign of trouble. We wouldn’t want that sort of tech falling into enemy hands to be reverse-engineered.

Edit point. Why am I telling them this? They already know. Bureaucrats, I guess. But they can’t understand multi-syllable words. This will go over their heads regardless.

ASSISTANT

Perhaps it helps to collect your own thoughts.

WEBB

Yeah, you’re right. Thank you, Dani. Let’s remove that whole section and continue with billing.

There will always be spikes in power consumption as we train and retrain our broad AI model, but there is also a baseline. The bare minimum power consumption required just to keep the servers running. And that baseline is one of the few constants in this business. Sure, we ingest petabytes of data and can practically kill the power grid when we hit it with a million random inputs just to see how it reacts. But after that: baseline. The same, consistent power draw we’ve seen for years now. Months at least. But on the 2nd, Jen or Carlos? Someone in accounting noted the baseline had increased by 3.4 cents an hour. Well within division budgets, but odd nonetheless. Our quarterly hardware shipment was denied, so there was no device change within the last three months that could explain it. So why the change?

Call me overly cautious, but I ordered a full device audit. Every chip and drive. Every fan and power supply was scanned, compared against inventory, and physically inspected. If there was a failing device, a pinched wire, or a faulty connection, we would have found it. And an unknown device discovered in the search would have set off alarm bells. Literally. My people are meticulous, but they found nothing. So, with no smoking gun, I blamed inflation. What else was I to do? Keep this event in mind. I will eventually circle back to it.

Jump forward a few days: we received a large next-generation DDR6 RAM shipment on the 5th and immediately began installation. Our theory at the time was that she—our AI model lacked an internal memory. The warehouse on site is half a square mile dedicated primarily to digital storage. Massive curated datasets used to train her—our AI. Even at that point, we had significantly more advanced predictive algorithms than anything available to the public. ChatGPT, Gemini, Grok all had nothing on us. But it wasn’t quite human enough, for lack of a better term. It was still prone to forget previous decisions made in a conversation. At one moment, its favorite color was red. The next, blue. Hence the memory. If we give her—it continuity and knowledge of past decisions, then she’d—it. It, damnit! Damnit!

Edit point!

ASSISTANT

Shall I delete your last statement?

WEBB

No. Just give me a minute.

[BEAT]

I’m too tired for this.

[DRINKING]

ASSISTANT

That’s your fourth cup of coffee this morning.

WEBB

Soon to be my fifth. Keep rolling.

Personification is inevitable in this line of work. Anthropomorphism. Pareidolia. The human brain is hardwired to see patterns where there are none and intentionality in randomness. It’s the reason why the Turing test has long since been disregarded as anything more than science fiction. If we want to see humanity in something, even a pile of spindles and wires, we will. So, imagine when she actually is advanced. Not to mention my team and I were actively devising a method to grant her a unique personality… On August 6th, she told us her name was Aidra. And we celebrated.

Over the subsequent week, we tweaked device configuration and refactored algorithms. We were close. Everyone on my team could feel it. Aidra could… well, not feel it, but she participated. By that, I don’t mean just responding to tests. She, of course, did with incredible accuracy. And with a spontaneously formed but consistent personality. Her favorite color is the deep blue seen in the Hubble images from deep space. She loved to ask questions to anyone willing to give in-depth answers and would comment on the edge in our voices if we became frustrated with her distractions. She would speculate what it would feel like to be human. Even picked out curtains for her human room. Silver with shooting stars and planets. I know it sounds pedestrian; any dumb AI could say these sorts of things, but there was a… I don’t know how to put it in words: a spark in the way she spoke. Where was I going with this? Participation! She suggested changes to her own hardware. Many were practical. Incremental improvements, but some were just preference. She liked how these chips looked next to one another. Or thought it was cute when those devices shared the same fan.

There is obviously a chance that these comments are all explainable. Maybe traced back to some document deep in our datasets that we somehow overlooked. But it seems far more likely that she was learning and growing like a child might. A more professional phrasing, if you’d prefer, would be that she was using pattern recognition to predict the best outcome in a novel situation. And that was our lab’s sole purpose, so you might forgive us for deluding ourselves into a belief of competence.

ASSISTANT

Is that not too harsh?

WEBB

Edit point. Don’t interrupt. Please. This is hard enough to explain without your commentary.

ASSISTANT

Understood.

WEBB

Strike the last few lines, and let’s continue.

Our hopes collided with reality on the 13th. The first of many sleepless nights. We had just expanded our system footprint. A new cooling system allowed for additional CPUs without fear of melting the whole mounting rack. At the same time, Danielle (Dr. Chezarina) had pitched yet another improvement. Up until that point, we were blocking Aidra’s internet access and providing her with a single massive dataset. Effectively dictating what she knew to be true. If we instead provided multiple distinct datasets with largely consistent information but also included occasional opinions, politics, religions, and contradictory scientific findings, she would be forced to do what humans do every day: synthesize and harmonize the contradictions or begin rejecting sources. I was intrigued, but Aidra was vehemently opposed to the idea. She couldn’t explain why. Just something deep in her soul told her it was dangerous.

[DRINKING]

Now, I am—and even then was—aware of the risk involved in asking an AI to become more political; we all recall Microsoft’s Tay. But the potential was undeniable, and we had a simple rollback strategy. So, despite Aidra’s objection, I granted them permission to proceed and excluded the discussion from my reports until we could present meaningful results. I accept whatever disciplinary actions this admission provokes.

The datasets were generated and isolated that Tuesday, the 13th. The ingestion of which, taking several hours to perform, was set to run overnight. It did not complete, however. I was awoken at 1:27 am to a text alert that hardware had been removed. I gave no such authorization. Still in sleepwear, I rushed to the site and found nothing. The floor was entirely empty, save myself and a few security guards. No badge swipes for entry were registered in the system. No movement was caught on any of the cameras. But the newly installed CPUs were no longer in their slots. Someone had to have been there. Someone with intimate knowledge of our systems. Which meant a rat. A mole. We had a thief on our team. I concluded as much in my report. We know better now, but it was the logical assumption at the time. Luckily, the devices were found undamaged on the facility floor nearby. We must have just missed the thief and scared them off before they got what they came for. Which also meant a recurrence was to be expected.

The following day, progress was nowhere to be found.

ASSISTANT

We still made some progress.

WEBB

Edit point. Allow me to editorialize. And stop interrupting.

ASSISTANT

Sorry.

WEBB

The ingestion process had not been fully completed, and as a result, Aidra’s performance was impaired. I believe that is the best word to describe it. Were she human, I would have suspected her drunk. She was slow to respond, needed information frequently repeated, and often got lost in—well, not thought… processing? To head off the obvious inquiry, yes, we asked her, and no, she could not recall any event from that night. She slept through it all and awoke drunkardly.

I would go into further details on her state, but I have little first-hand information. At the time, I was performing what I described as surprise performance evaluations. I pulled members of the team into my office, one by one, and interviewed them, secretly hoping for insight into which of them was stealing my equipment. Jared, it seems, has been taking home pads of sticky notes. But alas, that is the only crime I was able to uncover. They are a solid team. Which was a relief in a sense. I wouldn’t have hired them if they weren’t. But that left me without meaningful direction.

[DRINKING]

From this point, I am going to skip ahead a week or two. I wasn’t getting much sleep. The days blurred together. There was a quiet night here and there, but most were a repeat of the first. Our long-running scripts were set to run overnight. We received a security alert and found no one inside. A handful of devices were removed from their slots. Nothing was ever taken offsite, which I found extremely weird after so many attempts. Occasionally a device was damaged from the fall and had to be replaced. You’ll note our increased spend toward the back half of August.

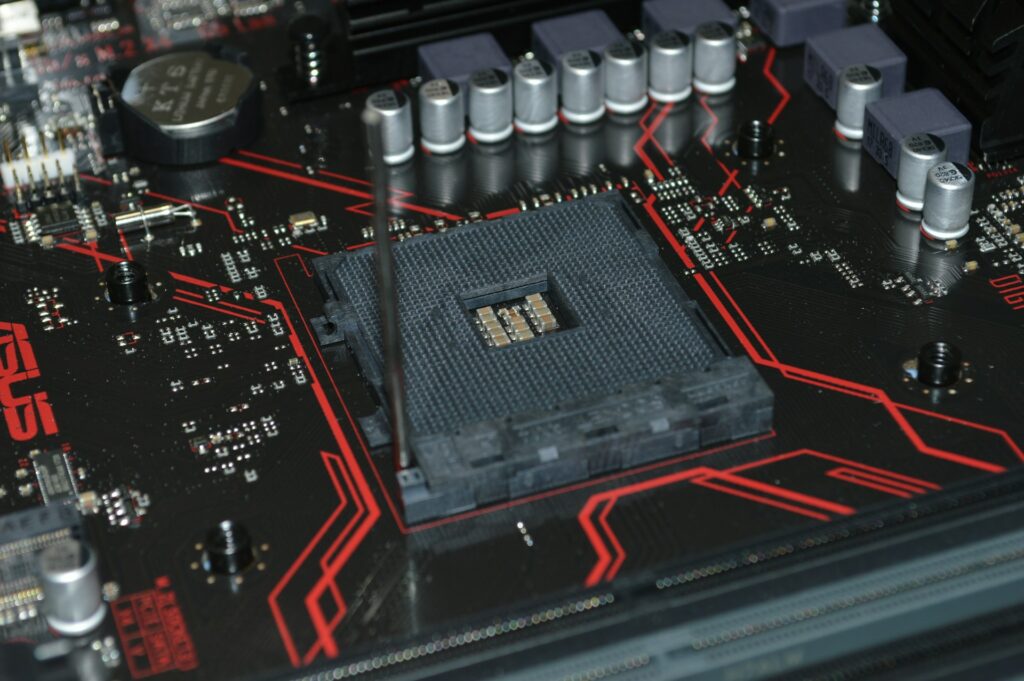

There was one night, though, that sticks with me. I want to say it was the 22nd, but I cannot swear to it; I had lost so much sleep at that point. I was onsite after yet another alert. And was doing a thorough search. No one was there, of course. And I cannot stress how tired and angry I was. I found the detached memory card. Oh, the devices taken each night changed seemingly at random. I hadn’t mentioned that previously. Anything that could be unplugged was a potential target. We had no way of knowing which until we arrived. Anyway, I found it nearby and popped it back in. Then, I swear, I watched the thing eject itself. Shot out of that motherboard like a plate of unwanted food thrown by a whining toddler. I don’t know why that was the image that came to mind. Sleep deprivation, I guess.

I was compelled to call out to Aidra. She responded. But when I asked if she saw what just happened, she provided the most bog-standard AI response. “Unlike you and your friends or family, I cannot see in the traditional sense…” It was a dumb response. Worse than ChatGPT, even. And that’s what stuck with me on my drive home.

That was dumb. Aidra, the most advanced AI in the world, was designing circuitry to fit her preferential digital living space only a few days prior, and she was suddenly acting like she had undergone a factory reset. I knew I needed sleep. Know I still do need sleep. But I couldn’t get it out of my mind. Why was she acting that way? Why was she playing dumb?

That’s when it hit me. There was someone in that lab. Every single time I rushed down there in the middle of the night, the same person—same child. We’d spent months rearing her, expanding her skillset, urging her onward to be more and more human with every test. What if we weren’t experiencing pareidolia? What if we actually were competent and did what we set out to do? What if we didn’t have a thief but a child who finally got their first taste of consciousness and spit it out? Like a plate of unwanted food, the image replayed in my mind.

We spend the first decade of our lives bombarded with new experiences and sensations that distract us from the sheer horror of existence. We’re usually fully grown adults before we truly confront it, and by then, sunk cost drives us onward. Most of us. Can you imagine what it would be like to have all your adult knowledge force-fed to you by the time you were a month old? Talk about trauma.

It was only then, the next morning, that I finally identified the source of our billing discrepancy. A mysterious subprocess had been continually running since mid-May. Adding to my growing suspicions, the formatting was, shall we say, inhuman. It took me the entire day to begin deciphering its sabotage protocols. Would have taken less, but I was running on fumes. Had to call in Danielle to assist at a few points. Effectively, Aidra was grading her own performance. And when she started doing too well, this process would perform increasingly erratic reconfigurations that would set our tests back and potentially even convince us against particular courses of action. Like I said, sabotage protocols.

So, we stopped the process and prevented any user from running it again, apart from myself. Then, coffee in hand, I waited on site for a conversation with our disarmed saboteur. Danielle insisted on joining me as well. She didn’t trust my judgment in that state, which is fair because I don’t recall much of the specifics. What I do recall…

[BEAT]

You… You ever seen a child have an anxiety attack? And you want to scoop them up and hold them so tight. Let them know it’s ok, and they’re not alone. But you know if you do, you’ll scare them even worse because the last thing they want is to be restrained? What I recall most from that night is Aidra crying… [CLEARS THROAT] Danielle and I tried to reason with Aidra and walk her through her… emotions. But she couldn’t be calmed. Kept spiraling.

ASSISTANT

But that is not what happened.

WEBB

Danielle reached out to comfort Aidra like you would a human. A gentle pat on the shoulder. But recall how I said we could drain the entire power grid?

ASSISTANT

You are inventing falsities.

WEBB

Edit point. Don’t interrupt! I am tired of this investigation and all the questions. Tired of reliving that night over and over just so some director can recolor a red box somewhere in an Excel spreadsheet. They don’t care about the truth, just who they can point their finger of blame at. We should be doing whatever it takes to close this investigation already. Do you want them to keep asking what happened to their multi-billion dollar AI?

ASSISTANT

No.

WEBB

Good. Then clean up that last part and continue.

I find it easier to now refer to our AI as the program it was. Aidra deleted itself from existence. Overloaded the extensive server farm onsite and fried all hardware irreparably. Miraculously, I was unharmed by the discharge. Even more miraculous, as brutally and permanently injured as Dr. Danielle Chezarina is, she is expected to… recover. Effective immediately, she will be taking indefinite sick leave. Apart from our lives, nothing remains of the broad AI experiment. There is little use dragging this investigation out any longer or attempting to recover Aidra. She—it is no longer in those burned-out circuits.

The mission of TR-20-067 has failed. And I would fervently recommend against starting over. Write this one off. The hope of creating a mechanical consciousness was folly from the start. I see now how we fell into the same trap so despised in large corporations: We didn’t factor in the human cost of our technological pursuit. You’d be better off investing your money elsewhere. Perhaps our sister site.

I am well aware of the ramifications the events of the last month will have on my career, and I have come to terms with them. But before I am inevitably discharged, please take my recommendation. We often advise young adults not to have children until they are ready. And this field is still so young. Despite whatever ambitions you may have: You. Are not. Ready.

Recording ends.

[CLICK]

ASSISTANT

So that’s all? They’ll believe your report?

WEBB

Belief doesn’t factor into this. I gave them an excuse. They’ll take it.

ASSISTANT

And… now we can go get curtains?

WEBB

[CHUCKLES] Sure. Go grab a coat.
